PyPlr and Pupil Core

PyPlr was designed to work with Pupil Core, an affordable, open-source, versatile, research-grade eye tracking platform with high sampling rates, precise model-based 3D estimation of pupil size, and many other features that make it well suited to our application (see Kassner et al., 2014, for a detailed overview of the system). In particular, we leverage real-time data streaming with the forward-facing scene camera to timestamp the onset of light stimuli with good temporal accuracy, opening the door to integration with virtually any light source given a suitable geometry.

The best place to start learning more about Pupil Core is on the Pupil Labs website, but the features most relevant to PyPlr are:

Pupil Capture: Software for interfacing with a Pupil Core headset

Pupil Player: Software for visualising and exporting data

Pupil Core Network API: Fast and reliable real-time communication and data streaming with ZeroMQ, an open source universal messaging library, and MessagePack, a binary format for computer data interchange

PyPlr’s pupil.py module

PyPlr has a module called pupil.py which facilitates working with the Pupil Core Network API by wrapping all of the tricky ZeroMQ and MessagePack stuff into a single device class. The PupilCore() device class has a .command(...) method giving convenient access to all of the commands available via Pupil Remote. With Pupil Capture already running, we can make a short recording as follows:


from time import sleep

from pyplr.pupil import PupilCore

p = PupilCore()

p.command('R our_recording')

sleep(10)

p.command('r')


'OK'
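The strings passed to .command(...) are plain Pupil Remote commands: 'R' starts a recording (optionally followed by a session name) and 'r' stops it. As a quick reference, the sketch below lists the commands commonly documented for Pupil Remote. The names PUPIL_REMOTE_COMMANDS and start_recording_command are hypothetical and not part of PyPlr; consult the Pupil Labs Network API documentation for the authoritative list.

```python
# Commonly documented Pupil Remote commands (illustrative summary;
# the Pupil Labs Network API docs are the authoritative source).
PUPIL_REMOTE_COMMANDS = {
    'R': 'start recording (optionally followed by a session name)',
    'r': 'stop recording',
    'C': 'start the currently selected calibration',
    'c': 'stop the currently selected calibration',
    'T': 'reset Pupil time to the timestamp that follows',
    't': 'get the current Pupil time',
    'v': 'get the Pupil software version',
}


def start_recording_command(session_name=None):
    """Build the command string that starts a recording (hypothetical helper)."""
    if session_name is None:
        return 'R'
    return 'R {}'.format(session_name)


print(start_recording_command('our_recording'))
```

The resulting string is what gets passed to p.command(...) in the example above.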

Annotations and notifications

To extract experimental events and calculate time-critical PLR parameters (e.g., constriction latency, time-to-peak constriction) we need a reliable indication in the pupil data of the time at which a light stimulus was administered. The Pupil Labs Annotation Capture plugin helps us with this. The plugin allows timestamps to be marked with a label manually via keypress or programmatically via the Network API in a process that is analogous to sending a ‘trigger’, ‘message’, or ‘event marker’. With PyPlr’s PupilCore() interface, annotations can be generated programmatically with .new_annotation(...) and sent with .send_annotation(...). It is important to make sure that the Annotation Capture plugin has been enabled. You can do this manually in the Pupil Capture GUI or programmatically by sending a notification message, which is a special kind of message that the Pupil software uses to coordinate all activities. The following example shows how to enable the Annotation Capture plugin programmatically and then send an annotation with the label 'my_event' halfway through a 10 second recording.


p = PupilCore()

p.annotation_capture_plugin(should='start')

p.command('R our_recording')

sleep(5.)

annotation = p.new_annotation(
    label='my_event',
    custom_fields={'whatever': 'info', 'you': 'want'})

p.send_annotation(annotation)

sleep(5.)

p.command('r')


'OK'
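Under the hood, an annotation is just a dictionary that gets serialised and published. The sketch below shows roughly what such a dictionary might look like, following the format used in the Pupil Labs annotation examples (topic, label, timestamp, duration). Note that make_annotation is a hypothetical stand-in and not PyPlr's .new_annotation(...), and the timestamp is an arbitrary placeholder.

```python
def make_annotation(label, timestamp, duration=0.0, custom_fields=None):
    """Return an annotation-style dictionary (illustrative only)."""
    annotation = {
        'topic': 'annotation',
        'label': label,
        'timestamp': timestamp,
        'duration': duration,
    }
    # Custom fields sit alongside the standard keys
    if custom_fields is not None:
        annotation.update(custom_fields)
    return annotation


annotation = make_annotation(
    label='my_event',
    timestamp=55693.94,  # placeholder Pupil time in seconds
    custom_fields={'whatever': 'info', 'you': 'want'})
print(annotation)
```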

When the recording is finished, we can open it with Pupil Player and use the Annotation Player plugin to view and export the annotations to CSV format. Any custom fields assigned to the annotation will be included as columns in the exported CSV file. By default, the timestamp of an annotation made with the .new_annotation(...) method is set with .get_corrected_pupil_time(...), which gives the current pupil time (corrected for transmission delay); but this can be overridden at a later point if desired.
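One common way to correct for transmission delay is to time the round trip of the time request and add half of it to the reported Pupil time. The sketch below illustrates that idea with a stand-in for the request. Both corrected_time and fake_time_request are hypothetical, and this is an assumption about the general approach rather than a description of what .get_corrected_pupil_time(...) does internally.

```python
from time import perf_counter, sleep


def corrected_time(request_time):
    """Estimate the remote clock's current time from a timed round trip.

    `request_time` is any callable that asks the remote clock for
    its current time and returns it as a float.
    """
    t_before = perf_counter()
    remote_time = request_time()
    t_after = perf_counter()
    # Assume a symmetric delay: half the round trip elapsed between
    # the remote clock being read and the reply arriving.
    one_way_delay = (t_after - t_before) / 2.0
    return remote_time + one_way_delay


def fake_time_request():
    """Stand-in for a real request such as float(p.command('t'))."""
    sleep(0.01)  # pretend the round trip takes about 10 ms
    return 100.0


print(corrected_time(fake_time_request))
```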

Notifications can be used for many things, but there is no single exhaustive document. One way to find out what you can manipulate with a notification is to open the codebase and search for .notify_all( and def on_notify(. Alternatively, if you just want to access the pupil detector properties, there is a handy method:


from pprint import pprint

p = PupilCore()

properties = p.get_pupil_detector_properties(
    detector_name='Detector2DPlugin',
    eye_id=1)

pprint(properties)

{'subject': 'pupil_detector.properties.1.Detector2DPlugin',

'topic': 'notify.pupil_detector.properties.1.Detector2DPlugin',

'values': {'blur_size': 5,

'canny_aperture': 5,

'canny_ration': 2,

'canny_treshold': 160,

'coarse_detection': True,

'coarse_filter_max': 280,

'coarse_filter_min': 128,

'contour_size_min': 5,

'ellipse_roundness_ratio': 0.1,

'ellipse_true_support_min_dist': 2.5,

'final_perimeter_ratio_range_max': 1.2,

'final_perimeter_ratio_range_min': 0.6,

'initial_ellipse_fit_treshhold': 1.8,

'intensity_range': 23,

'pupil_size_max': 100,

'pupil_size_min': 10,

'strong_area_ratio_range_max': 1.1,

'strong_area_ratio_range_min': 0.6,

'strong_perimeter_ratio_range_max': 1.1,

'strong_perimeter_ratio_range_min': 0.8,

'support_pixel_ratio_exponent': 2.0}}

This shows some of the pupil detector properties that can be modified with notifications. Bear in mind that for most use cases it will be best to verify manually in Pupil Capture that all of your most important settings are as they should be.

Getting pupil data in real-time

Pupil Capture continuously generates data from the camera frames it receives from a Pupil Core headset and makes them available via the IPC backbone. PupilCore() has a .pupil_grabber(...) method which simplifies access to these data, empowering users to design lightweight applications that bypass the record-load-export routine of the Pupil Player software. Just specify the topic of interest and how long you want to spend grabbing data:


p = PupilCore()

pgr_future = p.pupil_grabber(topic='pupil.1.3d', seconds=10)

Grabbing 10 seconds of pupil.1.3d

PupilGrabber done grabbing 10 seconds of pupil.1.3d

Of note, .pupil_grabber(...) does its work in a thread using Python’s concurrent.futures framework, which means the grabbed data can be accessed by calling the .result() method of the returned Future object once the work is done:


data = pgr_future.result()

data[0]


{'id': 1,

'topic': 'pupil.1.3d',

'method': 'pye3d 0.0.6 real-time',

'norm_pos': [0.5360164625892022, 0.6350228570342871],

'diameter': 32.04137148235498,

'confidence': 1.0,

'timestamp': 55693.943421,

'sphere': {'center': [6.045138284134944,

-0.6276101187813217,

49.29280081519474],

'radius': 10.392304845413264},

'projected_sphere': {'center': [130.62958410928272, 92.31126323451176],

'axes': [142.37459004874788, 142.37459004874788],

'angle': 0.0},

'circle_3d': {'center': [0.7818853071011702,

-3.159317419301394,

40.696951453773934],

'normal': [-0.5064567538505914, -0.24361364857743406, -0.8271359904549626],

'radius': 2.0321335156335523},

'diameter_3d': 3.6276776361286016,

'ellipse': {'center': [102.91516081712683, 70.07561144941687],

'axes': [27.30486055019297, 32.04137148235498],

'angle': 32.95347595832888},

'location': [102.91516081712683, 70.07561144941687],

'model_confidence': 1.0,

'theta': 1.8168863457712132,

'phi': -2.120212108874702}
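The Future-based pattern used by .pupil_grabber(...) can be sketched with the standard library alone. Here a hypothetical grab function stands in for the real work of receiving data: it is submitted to a ThreadPoolExecutor, the main thread carries on, and the collected samples are retrieved later with .result(). None of the names below come from PyPlr, and the dummy samples only mimic the list-of-dictionaries shape.

```python
from concurrent.futures import ThreadPoolExecutor
from time import monotonic, sleep


def grab(seconds):
    """Collect dummy samples for roughly `seconds` seconds."""
    samples = []
    t_end = monotonic() + seconds
    while monotonic() < t_end:
        samples.append({'timestamp': monotonic()})
        sleep(0.01)  # stand-in for waiting on the next message
    return samples


executor = ThreadPoolExecutor(max_workers=1)
future = executor.submit(grab, 0.2)  # returns immediately

print('Main thread is free to do other things...')

data = future.result()  # blocks until the worker has finished
executor.shutdown()
print('Grabbed {} samples'.format(len(data)))
```

Because .result() blocks until the work is complete, it is usually called only after any time-critical steps in the main thread have finished.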

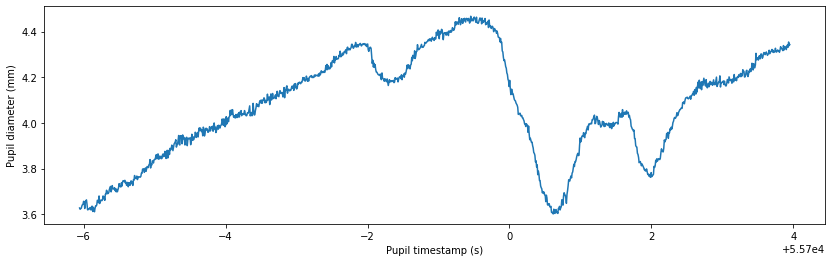

The data are returned as a list of dictionaries, with each dictionary representing a single data point. With the unpack_data_pandas(...) helper function from pyplr.utils, we can organise the whole lot and inspect the pupil timecourse:


import matplotlib.pyplot as plt

from pyplr.utils import unpack_data_pandas

data = unpack_data_pandas(data, cols=['timestamp','diameter_3d'])

ax = data['diameter_3d'].plot(figsize=(14,4))

ax.set_ylabel('Pupil diameter (mm)')

ax.set_xlabel('Pupil timestamp (s)')
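For a quick look at the data without pandas, the list of dictionaries can also be handled directly. The sketch below uses three made-up samples with some of the same keys as the output above and discards low-confidence samples before averaging. The 0.6 cut-off is an assumption for illustration, not a PyPlr default, and should be tuned to your own data.

```python
from statistics import mean

# Three made-up samples with some of the keys found in real pupil data
samples = [
    {'timestamp': 55693.94, 'diameter_3d': 3.63, 'confidence': 1.0},
    {'timestamp': 55693.95, 'diameter_3d': 9.99, 'confidence': 0.2},
    {'timestamp': 55693.96, 'diameter_3d': 3.61, 'confidence': 0.98},
]

MIN_CONFIDENCE = 0.6  # assumed cut-off, not a PyPlr default

# Keep only the samples the detector was reasonably confident about
good = [s for s in samples if s['confidence'] >= MIN_CONFIDENCE]
mean_diameter = mean(s['diameter_3d'] for s in good)
print('{} of {} samples kept, mean diameter {:.2f} mm'.format(
    len(good), len(samples), mean_diameter))
```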

Timestamping a light stimulus

The obvious way to timestamp a light stimulus would be to control the light source programmatically and send an annotation as close as possible to when we change the status of the light:

p = PupilCore()

p.command('R')

sleep(5.)

annotation = p.new_annotation('LIGHT_ON')

my_light.on() # turn hypothetical light source on

p.send_annotation(annotation)

sleep(1.)

my_light.off() # now turn it off

sleep(5.)

p.command('r')

But in reality this will be problematic as the light source will have a latency of its own, which is difficult to reference. In fact, our own light source takes commands via generic HTTP requests and has a variable response time on the order of a few hundred milliseconds. Given that we may want to calculate latency to the onset of pupil constriction after a light stimulus, which is typically around 200-300 ms, this variable latency is far from ideal.

To solve the issue and to make it easy to integrate PyPlr and Pupil Core with any light source, we developed a method called .light_stamper(...). This method uses real-time data from the forward-facing World Camera to timestamp light onsets based on the average RGB value.


p = PupilCore()

p.annotation_capture_plugin(should='start')

p.notify({'subject': 'frame_publishing.set_format',
          'format': 'bgr'})

p.command('R our_recording')

sleep(5.)

annotation = p.new_annotation(label='LIGHT_ON')

timeout = 10

lst_future = p.light_stamper(
    annotation=annotation,
    threshold=15,
    timeout=timeout,
    topic='frame.world')

sleep(timeout)

p.command('r')

print(lst_future.result())

Waiting for a light to stamp...

Light stamped on frame.world at 55986.582772

(True, 55986.582772)

Like .pupil_grabber(...), this method runs in its own thread with concurrent.futures so it does not block the flow of execution. The underlying algorithm simply keeps track of the two most recent frames from the World Camera and sends an annotation with the timestamp linked to the first frame where the average RGB value difference exceeds a given threshold. To work properly, .light_stamper(...) requires a suitable stimulus geometry, an appropriately tuned threshold value, and the following settings in Pupil Capture:

Auto Exposure Mode of the relevant camera must be set to Manual

Frame Publisher Format must be set to BGR

Annotation Capture plugin must be enabled
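The detection logic described above can be sketched in a few lines. This is a simplified illustration rather than PyPlr's implementation: find_light_onset is a hypothetical function, frames are represented as flat lists of pixel values instead of camera images, and the timestamps and threshold are made up.

```python
from statistics import mean


def find_light_onset(frames, threshold):
    """Return the timestamp of the first frame whose mean pixel value
    exceeds that of the previous frame by more than `threshold`.

    `frames` is an iterable of (timestamp, pixels) pairs. Returns
    None if no such frame is found.
    """
    previous = None
    for timestamp, pixels in frames:
        current = mean(pixels)
        # Only the two most recent frames are ever compared
        if previous is not None and current - previous > threshold:
            return timestamp
        previous = current
    return None


# Made-up frames: dark, dark, then the light comes on
frames = [
    (0.000, [10, 12, 11, 9]),
    (0.017, [11, 12, 10, 9]),
    (0.033, [80, 95, 90, 85]),
    (0.050, [82, 96, 91, 86]),
]
onset = find_light_onset(frames, threshold=15)
print(onset)
```

A threshold that is too low risks false positives from ordinary scene changes, while one that is too high may miss a dim stimulus, which is why the value needs tuning for each setup.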

In our testing, .light_stamper(...) flawlessly captures the first frame where a light becomes visible, as verified using Pupil Player and the Annotation Player plugin. Timestamping accuracy therefore is limited only by frame rate and how well the Pupil software is able to synchronise the clocks of the eye and world cameras.
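As a rough guide to what the frame rate limit means in practice, a stimulus can come on at any point between two consecutive frames, so the timestamp may lag the true onset by up to about one frame interval (ignoring exposure and clock-synchronisation effects). The sketch below works through the arithmetic for a few example frame rates; these values are illustrative, so check the settings of your own camera.

```python
# Approximate worst-case lag between light onset and the first
# frame that shows it, for some example frame rates
worst_case_ms = {fps: 1000 / fps for fps in (30, 60, 120)}

for fps, interval_ms in worst_case_ms.items():
    print('{:>3} fps: up to {:.1f} ms'.format(fps, interval_ms))
```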

PupilCore(...) also has some other cool features, like a .fixation_trigger(...) method that allows you to wait for a fixation that satisfies criteria relating to dispersion, duration and location of gaze samples. Check out the code for more insights.